Cortex Model Server by iAgentic

Intelligent Inference at the Core of Enterprise AI.

Blazing-Fast Inference Engine for LLM, Speech, and Vision Models

In the era of Large Language Models (LLMs), inference is the new bottleneck. From high-frequency chatbot interactions to real-time multimodal processing, today’s AI applications demand more than just accuracy — they demand performance, scalability, and flexibility.

New

Omni-Modality Scaling

Expanding support for video, audio, image, and sensor data alongside text.

Coming Soon

Long Context Support

Handle 100K+ tokens efficiently — critical for summarizing entire documents, medical records, or codebases.

30-Day Free Trial

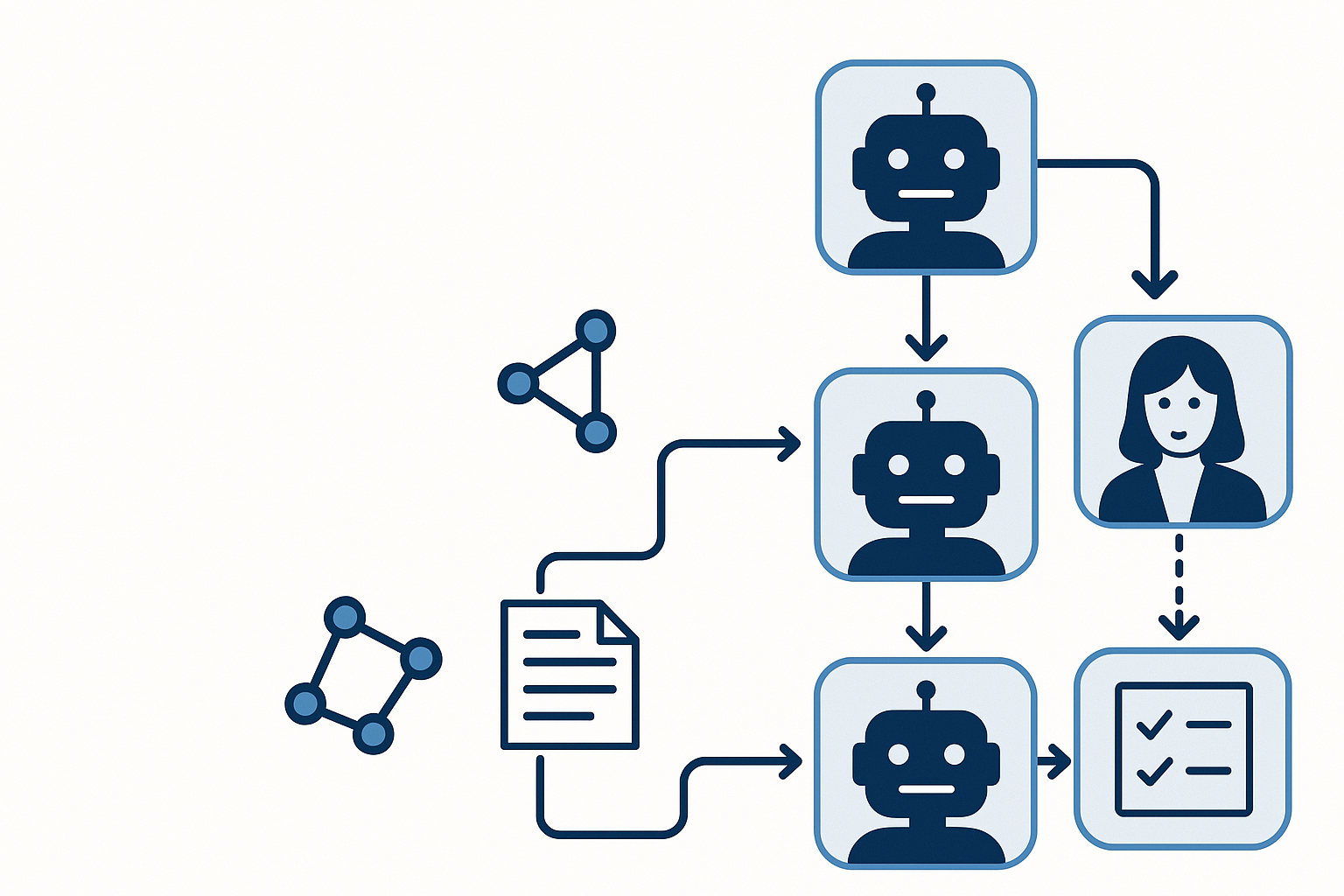

Reinforcement Learning from Human Feedback

Enhanced support for model tuning workflows that incorporate human preferences for safe and aligned AI.

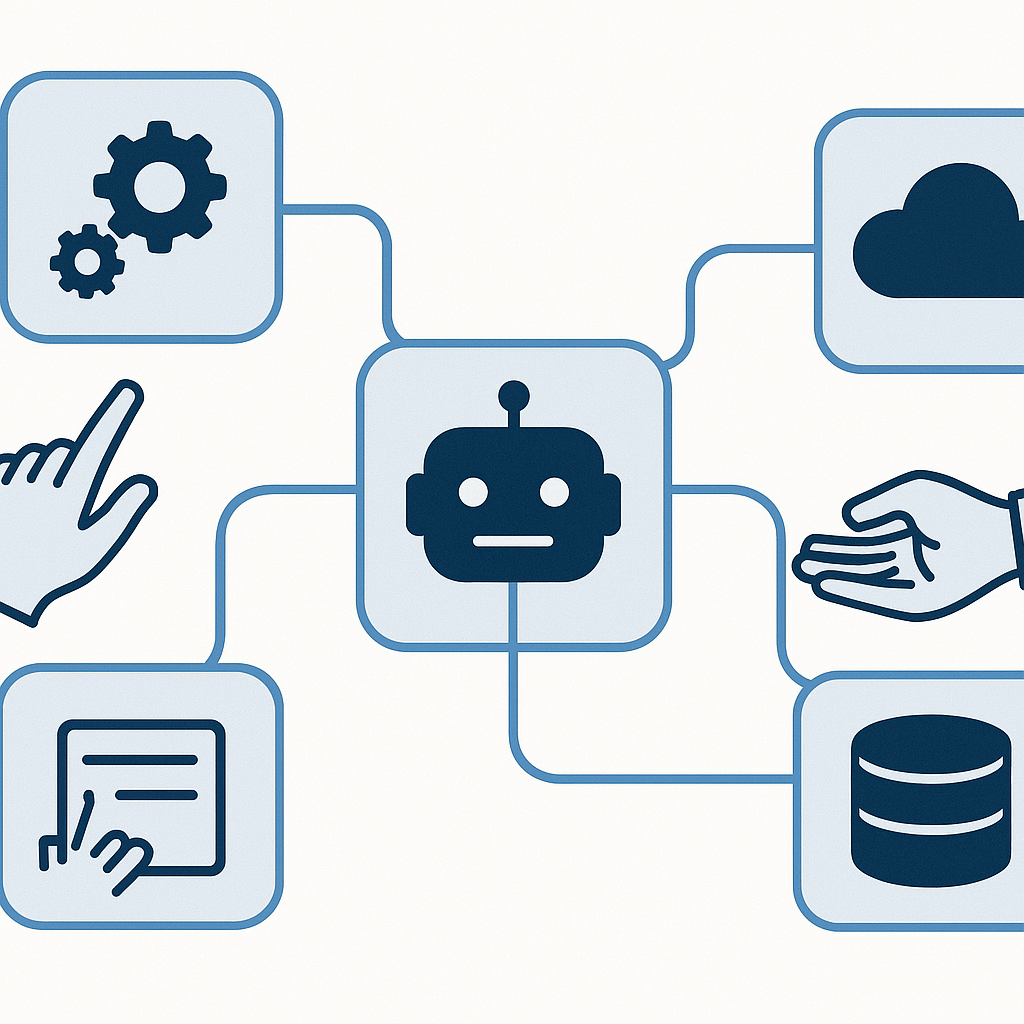

Broad Model Compatibility

Deploy the models you need — without rewriting a single line of inference logic.

Lightning-Fast Inference Performance

Cortex is built from the ground up to deliver millisecond-level latency, even for the largest models.

Production-Grade Reliability

Bring enterprise-grade LLM capabilities to production — without compromises.

Hardware-Agnostic and Cloud-Native

Run your models anywhere — on your cloud, your edge, or your on-prem data centers.